How an assistive-feeding robot went from picking up fruit salads to whole meals

According to data from 2010, around 1.8 million people in the U.S. can’t eat on their own. Yet training a robot to feed people presents an array of challenges for researchers. Foods come in a nearly endless variety of shapes and states (liquid, solid, gelatinous), and each person has a unique set of needs and preferences.

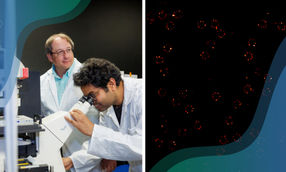

A team led by researchers at the University of Washington created a set of 11 actions a robotic arm can make to pick up nearly any food attainable by fork. This allows the system to learn to pick up new foods during one meal. Here, the robot picks up fruit.

University of Washington

A team led by researchers at the University of Washington created a set of 11 actions a robotic arm can make to pick up nearly any food attainable by fork. In tests with this set of actions, the robot picked up the foods more than 80% of the time, which is the user-specified benchmark for in-home use. The small set of actions allows the system to learn to pick up new foods during one meal.

The team presented its findings Nov. 7 at the 2023 Conference on Robot Learning in Atlanta.

UW News talked with co-lead authors Ethan K. Gordon and Amal Nanavati — UW doctoral students in the Paul G. Allen School of Computer Science & Engineering — and with co-author Taylor Kessler Faulkner, a UW postdoctoral scholar in the Allen School, about the successes and challenges of robot-assisted feeding.

The Personal Robotics Lab has been working on robot-assisted feeding for several years. What is the advance of this paper?

Ethan K. Gordon: I joined the Personal Robotics Lab at the end of 2018 when Siddhartha Srinivasa, a professor in the Allen School and senior author of our new study, and his team had created the first iteration of its robot system for assistive applications. The system was mounted on a wheelchair and could pick up a variety of fruits and vegetables on a plate. It was designed to identify how a person was sitting and take the food straight to their mouth. Since then, there have been quite a few iterations, mostly involving identifying a wide variety of food items on the plate. Now, the user with their assistive device can click on an image in the app, a grape for example, and the system can identify and pick that up.

Taylor Kessler Faulkner: Also, we’ve expanded the interface. Whatever accessibility systems people use to interact with their phones — mostly voice or mouth control navigation — they can use to control the app.

EKG: In this paper we just presented, we've gotten to the point where we can pick up nearly everything a fork can handle. So we can’t pick up soup, for example. But the robot can handle everything from mashed potatoes or noodles to a fruit salad to an actual vegetable salad, as well as pre-cut pizza or a sandwich or pieces of meat.

In previous work with the fruit salad, we looked at which trajectory the robot should take if it’s given an image of the food, but the set of trajectories we gave it was pretty limited. We were just changing the pitch of the fork. If you want to pick up a grape, for example, the fork’s tines need to go straight down, but for a banana they need to be at an angle, otherwise it will slide off. Then we worked on how much force we needed to apply for different foods.

In this new paper, we looked at how people pick up food, and used that data to generate a set of trajectories. We found a small number of motions that people actually use to eat and settled on 11 trajectories. So rather than just the simple up-down or coming in at an angle, it’s using scooping motions, or it’s wiggling inside of the food item to increase the strength of the contact. This small number still had the coverage to pick up a much greater array of foods.

We think the system is now at a point where it can be deployed for testing on people outside the research group. We can invite a user to the UW, and put the robot either on a wheelchair, if they have the mounting apparatus ready, or a tripod next to their wheelchair, and run through an entire meal.
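To give a rough sense of why a small action set makes it plausible to learn a new food within a single meal, here is a minimal Python sketch. It is an illustration only: the interview does not describe the team's learning algorithm, so the epsilon-greedy strategy below is a stand-in, and every name in it (`EpsilonGreedyPicker`, `simulate_meal`, the success probabilities) is hypothetical. The only number taken from the article is the count of 11 actions.

```python
import random

NUM_ACTIONS = 11  # size of the action set reported in the article


class EpsilonGreedyPicker:
    """Tracks per-action pickup results for one food (hypothetical helper)."""

    def __init__(self, num_actions=NUM_ACTIONS, epsilon=0.1, seed=0):
        self.epsilon = epsilon            # chance of trying a random action
        self.rng = random.Random(seed)    # private RNG so runs are repeatable
        self.attempts = [0] * num_actions
        self.successes = [0] * num_actions

    def success_rate(self, action):
        # Observed fraction of successful pickups; 0.0 if never tried.
        if self.attempts[action] == 0:
            return 0.0
        return self.successes[action] / self.attempts[action]

    def best_action(self):
        # Action with the highest observed success rate so far.
        return max(range(len(self.attempts)), key=self.success_rate)

    def choose(self):
        # Try each action once first, then mostly reuse the best one.
        untried = [a for a, n in enumerate(self.attempts) if n == 0]
        if untried:
            return untried[0]
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.attempts))
        return self.best_action()

    def update(self, action, picked_up):
        # Record the outcome of one pickup attempt.
        self.attempts[action] += 1
        if picked_up:
            self.successes[action] += 1


def simulate_meal(true_success, bites=40, seed=0):
    """Simulate one meal; true_success holds made-up per-action probabilities."""
    picker = EpsilonGreedyPicker(num_actions=len(true_success), seed=seed)
    env = random.Random(seed + 1)  # separate RNG standing in for the real world
    for _ in range(bites):
        action = picker.choose()
        picker.update(action, env.random() < true_success[action])
    return picker


if __name__ == "__main__":
    # Made-up food for which only one of the 11 actions ever works.
    probs = [0.0] * NUM_ACTIONS
    probs[7] = 1.0
    picker = simulate_meal(probs, bites=40)
    print("best action:", picker.best_action())
    print("attempts per action:", picker.attempts)
```

With only 11 candidates, the sketch has tried every action once after 11 bites and can spend the rest of the meal mostly repeating whichever worked best. A real system would also use the camera image of the food to guide its choice, which this toy example leaves out.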

For you as researchers, what are the vital challenges ahead to make this something people could use in their homes every day?

EKG: We've so far been talking about the problem of picking up the food, and there are more improvements that can be made here. Then there's the whole other problem of getting the food to a person's mouth, as well as how the person interfaces with the robot, and how much control the person has over this at least partially autonomous system.

TKF: Over the next couple of years, we’re hoping to personalize the robot to different people. Everyone eats a little bit differently. Amal did some really cool work on social dining that highlighted how people’s preferences are based on many factors, such as their social and physical situations. So we’re asking: How can we get input from the people who are eating? And how can the robot use that input to better adapt to the way each person wants to eat?

Amal Nanavati: There are several different dimensions that we might want to personalize. One is the user's needs: How far the user can move their neck impacts how close the fork has to get to them. Some people have differential strength on different sides of their mouth, so the robot might need to feed them from a particular side of their mouth. There’s also an aspect of the physical environment. Users already have a bunch of assistive technologies, often mounted around their face if that's the main part of their body that's mobile. These technologies might be used to control their wheelchair, to interact with their phone, etc. Of course, we don't want the robot interfering with any of those assistive technologies as it approaches their mouth.

There are also social considerations. For example, if I’m having a conversation with someone or at home watching TV, I don't want the robot arm to come right in front of my face. Finally, there are personal preferences. For example, among users who can turn their head a little bit, some prefer to have the robot come from the front so they can keep an eye on the robot as it's coming in. Others feel like that's scary or distracting and prefer to have the bite come at them from the side.

A key research direction is understanding how we can create intuitive and transparent ways for the user to customize the robot to their own needs. We’re considering trade-offs between customization methods where the user is doing the customization, versus more robot-centered forms where, for example, the robot tries something and says, “Did you like it? Yes or no.” The goal is to understand how users feel about these different customization methods and which ones result in more customized trajectories.

What should the public understand about robot-assisted feeding, both in general and specifically the work your lab is doing?

EKG: It’s important to look not just at the technical challenges, but at the emotional scale of the problem. It’s not a small number of people who need help eating. There are various figures out there, but it’s over a million people in the U.S. Eating has to happen every single day. And to require someone else every single time you need to do that intimate and very necessary act can make people feel like a burden or self-conscious. So the whole community working towards assistive devices is really trying to help foster a sense of independence for people who have these kinds of physical mobility limitations.

AN: Even these seven-digit numbers don’t capture everyone. There are permanent disabilities, such as a spinal cord injury, but there are also temporary disabilities, such as breaking your arm. All of us might face disability at some time as we age, and we want to make sure that we have the tools necessary to ensure that we can all live dignified and independent lives. Also, unfortunately, even though technologies like this greatly improve people's quality of life, it’s incredibly difficult to get them covered by U.S. insurance companies. I think more people knowing about the potential quality of life improvement will hopefully open up greater access.

Additional co-authors on the paper were Ramya Challa, who completed this research as an undergraduate student in the Allen School and is now at Oregon State University, and Bernie Zhu, a UW doctoral student in the Allen School. This research was partially funded by the National Science Foundation, the Office of Naval Research and Amazon.